SeismoStorm

The SEISMOSTORM project is a Belgian project funded by Belspo (the Belgian Science Policy Office, BRAIN-be 2.0) and involving the Royal Observatory of Belgium (ROB) and the Université Libre de Bruxelles (ULB). It focuses on making analog seismograms Findable, Accessible, Interoperable, and Reusable (FAIR). The project also emphasizes the importance of re-analyzing legacy seismic data to study the last century’s oceanic climate. The collaboration between two disciplines, ocean wave modeling and seismology, and the scientific potential that resulted from it gave the project its name, SEISMO-STORM.

Background

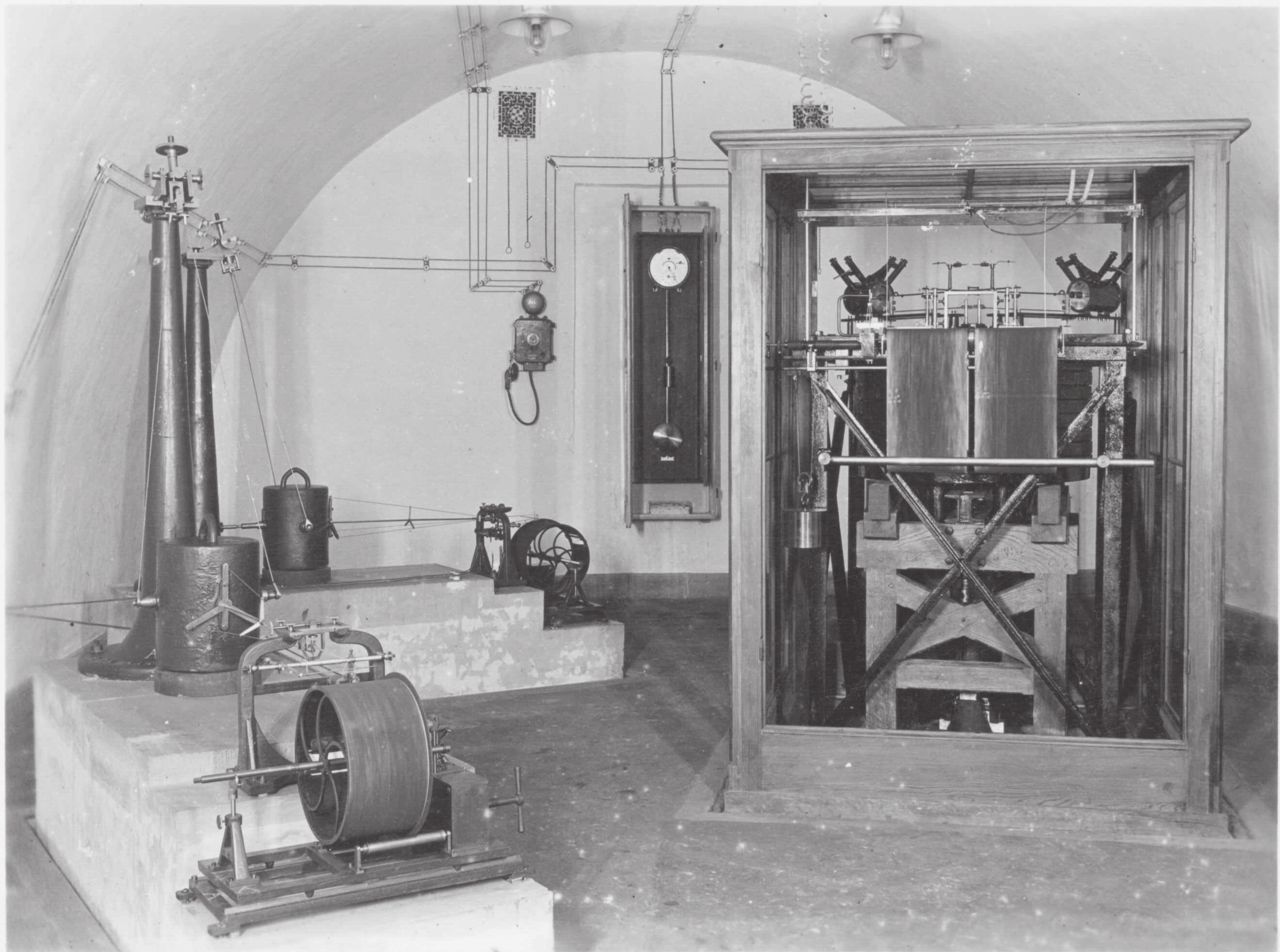

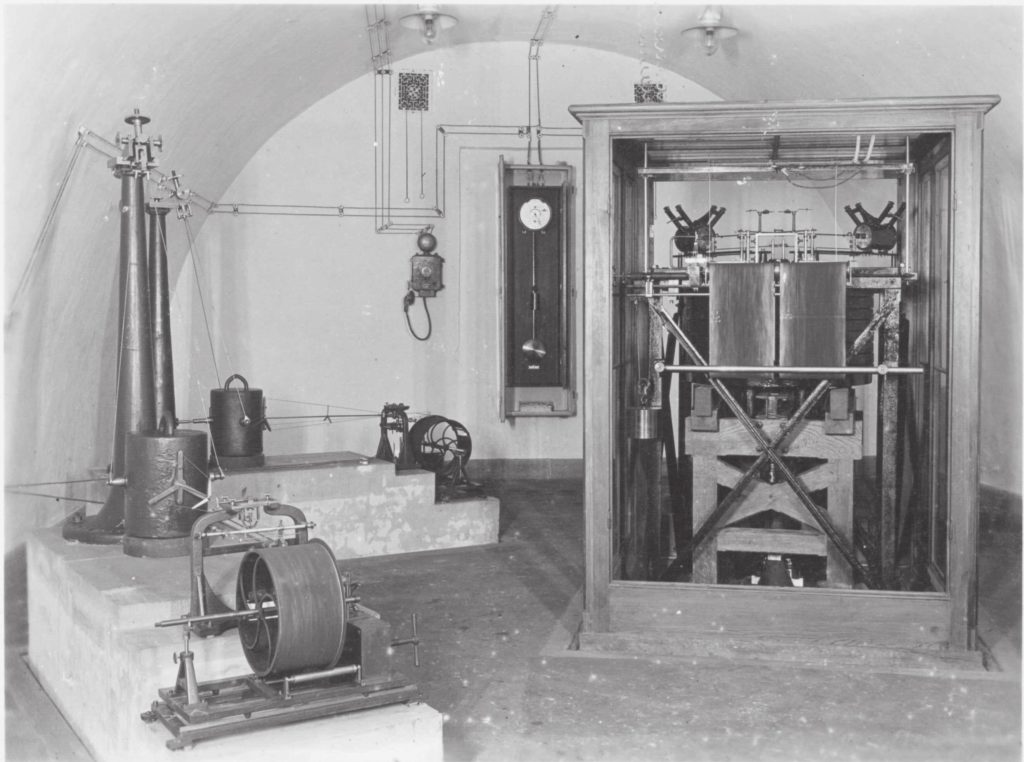

Eugène Lagrange installed the first Belgian analog seismic station at the ROB in Uccle in 1898. Until the 1970s, seismic records typically used ink on white paper, scratching black-smoked paper, or light on photographic paper. Analog seismic records are now stacked and archived in boxes in most observatories around the world, potentially exposing them to physical decay and permanent loss.

The scientific community has a significant interest in preserving parts of those continuous records, especially those of major earthquakes, by scanning and digitizing them. Digitizing analog paper seismic data not only preserves the scientific legacy but also enables new research by bringing historical data into the digital age.

Over the past 15 years, a global strategy has developed to make high-quality continuous seismic data FAIR by making them publicly accessible through global FDSN servers. By applying these FAIR principles, historical analog seismic data will be made as open, usable, and accessible as contemporary seismic data. To this end, image processing and machine learning methodologies are developed to digitize the waveforms, which are then converted into calibrated and time-coded seismic time series that will become publicly available on international web services.

Proof of concept

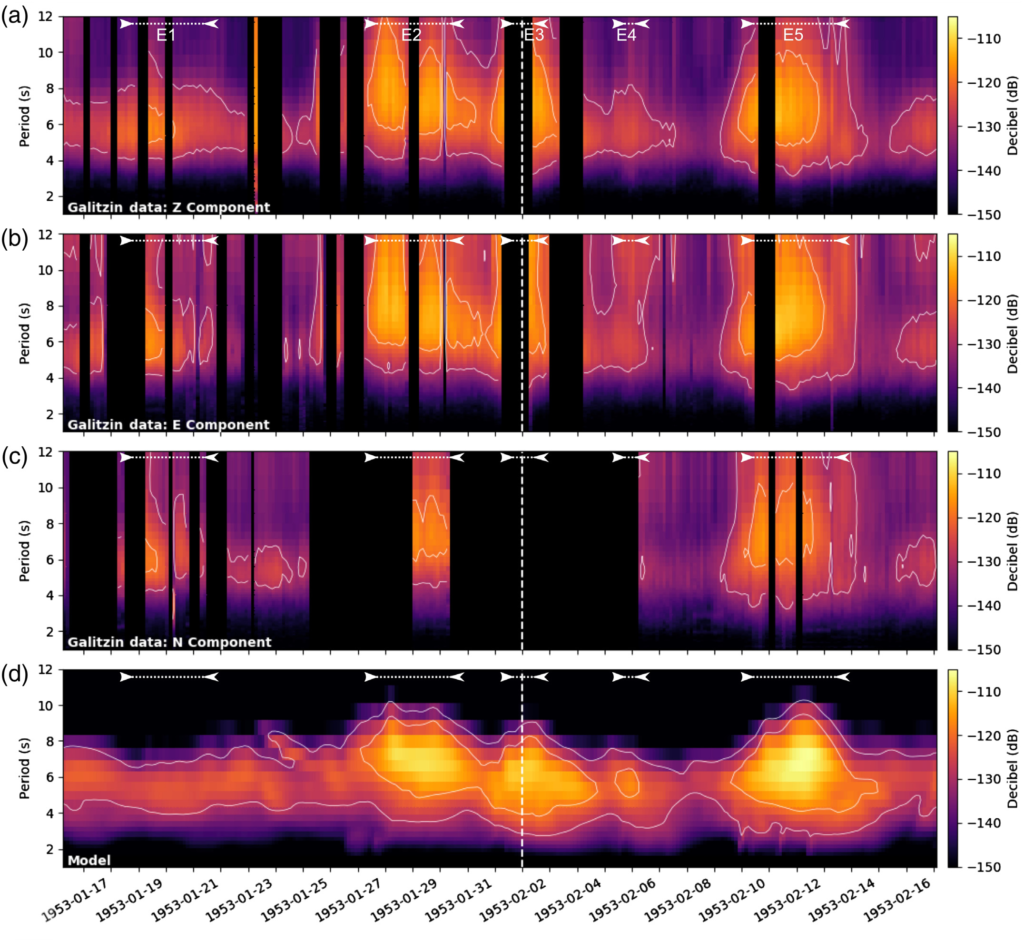

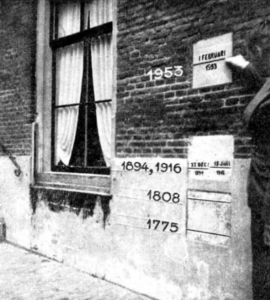

The project builds upon the outputs of Lecocq et al. 2020 (SRL). They used digitized records of Galitzin seismograms from the historic Uccle station to analyze the ground motion generated by the microseisms associated with the 1953 “Big Flood”, a severe Atlantic storm that caused major flooding and historic damage in the Netherlands, north-west Belgium, England, and Scotland. The paper also relied on ocean models to validate the digitized waveforms and established the foundations of the strategy later laid out in the SeismoStorm project to make the legacy seismic data of the ROB FAIR.

Objectives

The SEISMOSTORM project aims to make the ROB’s analog seismic data openly available to the seismological community, following the community-defined storage standards for digital seismic data. This is achieved by developing image processing and machine learning methodologies to digitize the waveforms from scanned seismograms and transform them into calibrated and time-coded seismic time series. Those time series require accurate instrument responses to be useful for digital seismology. Information and metadata about all ROB historical seismic instruments are therefore compiled from historical seismic bulletins to construct the corresponding frequency/amplitude instrument response functions, with the aim of matching modern standards for digital data. The digitized data are then validated by extracting the microseismic ground motion from the analog seismograms and comparing it to the theoretical microseismic ground motion in Uccle predicted from atmosphere-ocean-solid earth coupling mechanisms. This is done for several periods of the last century, chosen to include significant storms in the shallow waters of the Southern North Sea that are likely to produce strong changes in amplitude and oscillation period on the analog records. The “rescue” of the digitized data is completed by making them publicly available on international web services, where they can be further valorized.
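As an illustration of what a frequency/amplitude instrument response function looks like in the poles-and-zeros form used for modern instruments, the following minimal sketch evaluates the response of an idealised damped pendulum recording ground displacement. It is not the project's code: the function names, the single-stage pendulum model, and the numerical values (12 s period, damping 0.7, magnification 300) are assumptions chosen for illustration only, and real instruments such as galvanometric seismographs need additional poles and zeros.

```python
import cmath
import math


def pendulum_paz(period_s, damping):
    """Return (zeros, poles) in rad/s for an idealised damped pendulum.

    Assumed model: H(s) = s^2 / (s^2 + 2*h*w0*s + w0^2), which is flat to
    ground displacement above the natural frequency. This is a hypothetical
    single-stage model, not the response of a specific ROB instrument.
    """
    w0 = 2.0 * math.pi / period_s
    root = cmath.sqrt(complex(damping ** 2 - 1.0))  # imaginary if underdamped
    poles = [w0 * (-damping + root), w0 * (-damping - root)]
    zeros = [0j, 0j]  # two zeros at the origin
    return zeros, poles


def paz_response(freq_hz, zeros, poles, gain=1.0):
    """Evaluate gain * prod(s - z) / prod(s - p) at s = i * 2 * pi * f."""
    s = 2j * math.pi * freq_hz
    value = complex(gain)
    for z in zeros:
        value *= s - z
    for p in poles:
        value /= s - p
    return value


if __name__ == "__main__":
    # Hypothetical instrument constants, for illustration only.
    zeros, poles = pendulum_paz(period_s=12.0, damping=0.7)
    for f in (0.01, 0.05, 1.0 / 12.0, 0.2, 1.0):
        h = paz_response(f, zeros, poles, gain=300.0)
        print(f"{f:6.3f} Hz  amplitude {abs(h):8.2f}  "
              f"phase {math.degrees(cmath.phase(h)):7.1f} deg")
```

In this assumed model the amplitude at the natural frequency equals the gain divided by twice the damping, and it tends to the gain at high frequency, which gives two quick sanity checks when constants are transcribed from a bulletin.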

Methodology

The SeismoStorm project is divided into 4 key modules:

- Frequency/amplitude instrument response functions determination

- Developing new tools for digitizing analog seismograms

- Validating time series with modeled microseisms

- Making time series available through web services

The project will make century-old analog seismic data and metadata compliant with modern standards by bridging two domains of expertise, namely seismology and machine learning. The research is divided into four steps:

- Identify the characteristics and the typical metadata information from the different types of instruments

- Forward model physically-bounded wiggles

- Develop image processing and machine learning techniques to identify and characterize the seismic traces from scanned analog seismograms

- Compare the recovered seismic traces with modeled atmosphere-ocean-solid earth generated microseisms for major storms during the last century

(1) Analog instruments were mostly displacement sensors that recorded ground motion by moving a beam of light above a photographic paper or by moving a pen on top of a smoked paper. The instruments, with well-established frequency-amplitude responses, were calibrated by the operators, and their calibration factors were compiled in official Bulletins. We will compile all the information about all ROB historical seismic instruments to reconstruct the instrument changes through time, using existing tools to compute the instrument responses (e.g., ObsPy, Evalresp). The instrument response functions will be expressed in poles and zeros to match modern instruments.

(2) The responses of the different instruments will be applied to current-day seismic observations to simulate what they would have recorded if they were still running today. This will provide realistic synthetic time series as templates and training sets of valid data for machine learning in the next step.

(3) The waveforms will be automatically extracted from the scanned seismograms with machine learning, using a coarse-to-fine approach to rapidly detect and score possible data issues. The methods used will range from classical image processing techniques to high-level machine learning such as deep neural networks using supervised examples and data augmentation. The analysis will focus on specific features available in the ROB data, such as the timing features and the calibration sequences, which in turn will be transformed into associated metadata. The quality score produced at each step of the image analysis will also be added as metadata. All the extracted segments, augmented by their metadata and in particular their timing descriptor, will feed a time-series database for further analysis.

(4) The validation of the digitized time series will be done by computing the theoretical microseismic ground motion in Uccle generated in the last century by significant storms in the shallow waters of the Southern North Sea. The microseism model used is based on the WAVEWATCH III wave model and combines a numerical wave model with a transformation of wave spectra into microseisms. The comparison between modeled and digitized data is then based on three-hourly model spectra, from which the dominant period of oscillation can be extracted, as well as the root mean square of the ground motion using Parseval’s identity.
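The two quantities compared in step (4), the dominant period and the root mean square (RMS) of the ground motion, can both be read from a power spectrum. The sketch below is a hedged illustration rather than the project's implementation: `band_power` and `rms_and_dominant_period` are hypothetical helper names, a naive discrete Fourier transform stands in for whatever spectral estimator is actually used, and a synthetic 6 s sine wave stands in for a digitized trace. Parseval's identity guarantees that the sum of the one-sided power over all frequency bins equals the mean square of the time series.

```python
import cmath
import math


def band_power(samples, dt):
    """One-sided power per frequency bin of a real series (naive DFT).

    The bins are scaled so that sum(power) == mean(x**2), which is the
    discrete form of Parseval's identity.
    """
    n = len(samples)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        p = abs(coeff) ** 2 / n ** 2
        if 0 < k < n / 2:
            p *= 2.0  # fold the matching negative-frequency bin
        freqs.append(k / (n * dt))
        power.append(p)
    return freqs, power


def rms_and_dominant_period(freqs, power):
    """Return (RMS, period of the strongest non-zero-frequency bin)."""
    rms = math.sqrt(sum(power))
    peak = max(range(1, len(power)), key=lambda k: power[k])
    return rms, 1.0 / freqs[peak]


if __name__ == "__main__":
    # Synthetic stand-in for a digitized trace: 6 s period, amplitude 2
    # (arbitrary displacement units), sampled every 0.5 s for 60 s.
    dt, n = 0.5, 120
    trace = [2.0 * math.sin(2.0 * math.pi * i * dt / 6.0) for i in range(n)]
    freqs, power = band_power(trace, dt)
    rms, period = rms_and_dominant_period(freqs, power)
    time_rms = math.sqrt(sum(x * x for x in trace) / n)
    print(f"spectral RMS {rms:.4f}, time-domain RMS {time_rms:.4f}, "
          f"dominant period {period:.2f} s")
```

Applying the same summary function to a modeled spectrum and to the spectrum of a digitized trace yields directly comparable numbers, which is the design choice this sketch is meant to illustrate.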

Expected results, impact, and valorization perspectives

The final dataset will become the first to follow the FAIR principles for analog seismograms, resulting in the preservation of this scientific legacy, but also in its valorization, with scientists worldwide having access to it at all times. The high standards used at the different steps of the project will also enable a wide range of potential scientific applications previously impossible for this type of analog data. The tools and methodologies developed in this project will be available for use at other institutions interested in the digitization of analog records, seismic or otherwise. Seismic data originating from analog records are currently the only non-meteorological data available for 20th-century atmosphere-ocean-solid earth climate reanalysis. The digitization of ~80 years of analog records from different locations, such as historical observatories, could therefore help track storms and extreme events from the pre-satellite era. This will bring new insight into how these historical events evolved in the North Atlantic Ocean and help prevent future floods in the context of global sea-level rise and local coastal subsidence.